When Shopify CEO Tobi Lütke encouraged everyone at his company to make AI tools a natural part of their work, it wasn’t a sudden shift. It came after years of careful preparation: building the right culture, legal framework, and infrastructure. Smaller companies don’t have Shopify’s scale or budget, but they can still learn a lot from how Shopify approached AI adoption and adapt it to their own realities.

Start by assembling a cross-functional pilot team of five to seven people: a sales rep, someone from customer support, and perhaps an engineer or operations lead. Give this group a modest budget (around $5,000) and 30 days to show whether AI can help solve real problems. Set clear, measurable goals up front, for example cutting the time it takes to respond to customer emails by 20%, automating parts of sales prospect research to save two hours a week, or reducing repetitive manual data entry in operations by 30%. This focus keeps the team from chasing shiny tools that have no real payoff.

You don’t need to build your own AI platform or hire data scientists to get started. Many cloud AI services offer pay-as-you-go pricing, so you can experiment without a large upfront investment. For example, a small customer support team might spend a few hundred dollars a month on an AI service such as ChatGPT or the OpenAI API, connected to their helpdesk software, to draft faster, more personalized email replies. A sales team could use a no-code tool like Zapier to build simple automations that pull prospect details from their CRM, run them through an AI model to generate email drafts, and queue those drafts for a rep to review before sending. Workflows like these can often be set up in less than a week, and the time savings can be substantial, though you should confirm them against your pilot goals rather than assume them.

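As you experiment, keep a close eye on costs. AI APIs are typically billed per token, so costs grow with both the number of requests and the length of each prompt and response. A small team making thousands of requests a month with long prompts or a premium model can run up an unexpected bill of $1,000 or more if no one is watching. Monitor usage weekly, and set spending limits or alerts in your provider’s dashboard during the pilot wherever the provider supports them.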
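To make the cost advice concrete, here is a minimal Python sketch that projects a monthly bill from average request size and refuses further requests once a pilot cap is reached. The per-1,000-token prices, the `PilotBudget` class, and the 80% warning threshold are all hypothetical; substitute your provider’s published rates, and prefer the provider’s own spending limits where they exist.

```python
from dataclasses import dataclass


def estimate_cost(input_tokens: int, output_tokens: int,
                  price_in_per_1k: float, price_out_per_1k: float) -> float:
    """Estimated USD cost of one request, given prices per 1,000 tokens."""
    return (input_tokens / 1000) * price_in_per_1k + (output_tokens / 1000) * price_out_per_1k


def project_monthly_cost(requests_per_month: int, avg_input_tokens: int,
                         avg_output_tokens: int, price_in_per_1k: float,
                         price_out_per_1k: float) -> float:
    """Project a monthly bill by multiplying the average request cost by volume."""
    per_request = estimate_cost(avg_input_tokens, avg_output_tokens,
                                price_in_per_1k, price_out_per_1k)
    return requests_per_month * per_request


@dataclass
class PilotBudget:
    """Hypothetical spend guard for a pilot: blocks requests past a monthly cap."""
    monthly_cap_usd: float
    warn_fraction: float = 0.8  # warn once 80% of the cap has been spent
    spent_usd: float = 0.0

    def charge(self, cost_usd: float) -> bool:
        """Record a request's cost. Returns False, recording nothing, if it would exceed the cap."""
        if self.spent_usd + cost_usd > self.monthly_cap_usd:
            return False
        self.spent_usd += cost_usd
        return True

    def should_warn(self) -> bool:
        """True once spending reaches the warning threshold."""
        return self.spent_usd >= self.warn_fraction * self.monthly_cap_usd


if __name__ == "__main__":
    # Placeholder prices: replace with your provider's current rates.
    projected = project_monthly_cost(5000, 2000, 500,
                                     price_in_per_1k=0.01, price_out_per_1k=0.03)
    print(f"Projected monthly cost: ${projected:,.2f}")

    # Simulate identical requests until the hypothetical $300 cap blocks one.
    budget = PilotBudget(monthly_cap_usd=300.0)
    per_request = estimate_cost(2000, 500, 0.01, 0.03)
    allowed = 0
    while budget.charge(per_request):
        allowed += 1
    print(f"Requests allowed under the cap: {allowed}, warn: {budget.should_warn()}")
```

With the placeholder prices above (1 cent per 1,000 input tokens, 3 cents per 1,000 output tokens), 5,000 requests a month averaging 2,000 input and 500 output tokens project to about $175. Longer prompts or a pricier model push that figure up quickly, which is why average request size deserves as much attention as request count.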

Using AI responsibly means setting some basic ground rules early. Include someone from legal or compliance in your pilot to help create simple guidelines. For instance, never feed sensitive or personally identifiable customer information into AI tools unless it’s properly masked or anonymized. Also, require human review of AI-generated responses before sending them out, at least during your early adoption phase. This “human-in-the-loop” approach catches errors and builds trust.
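The two rules above (mask personal data, keep a human in the loop) can be sketched in a few lines of Python. This is a minimal illustration under loud assumptions: the regular expressions catch only common email and phone formats and will miss names, addresses, and account numbers, while the phone pattern will also mask any long run of digits, which errs on the side of caution. `Draft` and `send` are hypothetical stand-ins for whatever your helpdesk actually does; a dedicated PII-detection tool is the safer choice beyond a pilot.

```python
import re
from dataclasses import dataclass
from typing import Optional

# Simple patterns for common formats only; they will miss many kinds of PII.
EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
# An optional "+" followed by at least 9 characters made of digits, spaces,
# parentheses, dots, or hyphens, beginning and ending with a digit.
PHONE_RE = re.compile(r"\+?\d[\d\s().-]{7,}\d")


def mask_pii(text: str) -> str:
    """Replace obvious emails and phone numbers with placeholders before text goes to an AI tool."""
    text = EMAIL_RE.sub("[EMAIL]", text)
    return PHONE_RE.sub("[PHONE]", text)


@dataclass
class Draft:
    """An AI-generated reply awaiting review (hypothetical structure)."""
    text: str
    approved_by: Optional[str] = None  # set when a person signs off


def send(draft: Draft) -> str:
    """Hypothetical send step that refuses any draft a person has not approved."""
    if draft.approved_by is None:
        raise PermissionError("AI drafts must be reviewed by a person before sending")
    return f"sent (approved by {draft.approved_by})"


if __name__ == "__main__":
    ticket = "Contact jane.doe@example.com or +1 (555) 123-4567 about order 4821."
    print(mask_pii(ticket))  # Contact [EMAIL] or [PHONE] about order 4821.

    draft = Draft(text="Thanks for reaching out! Your refund is on its way.")
    draft.approved_by = "Sam"  # a reviewer signs off before anything is sent
    print(send(draft))
```

Putting the approval check inside the send step, rather than relying on a team habit, means an unreviewed draft cannot go out by accident during the early adoption phase.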

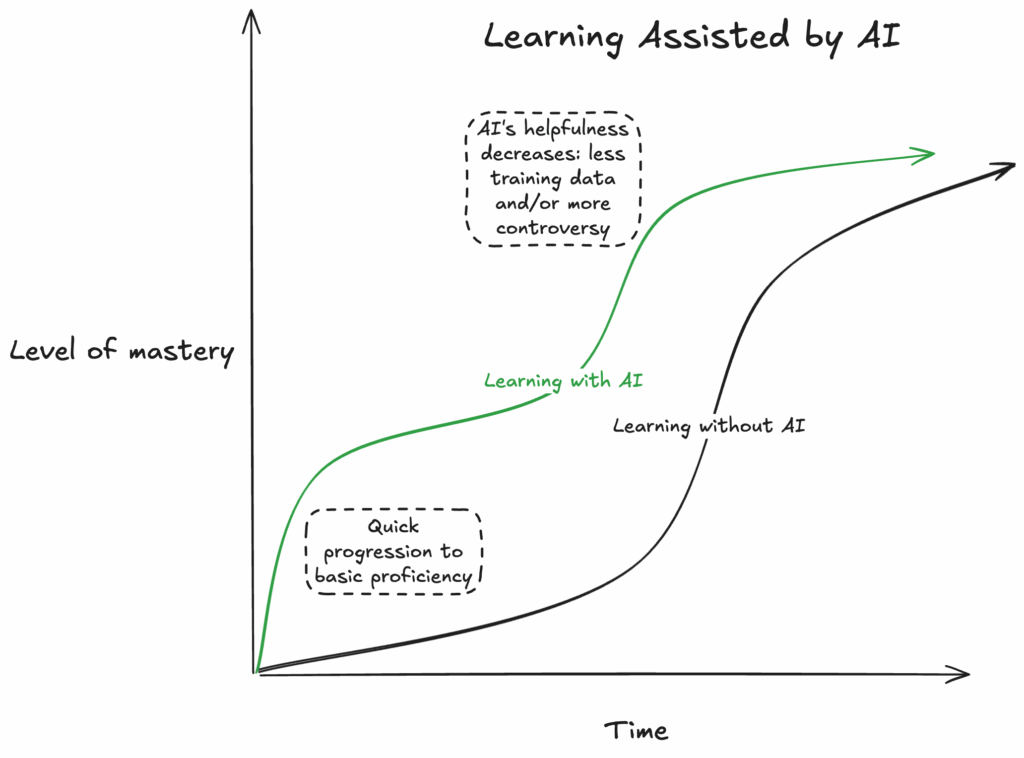

Training people to use AI effectively is just as important as the tools themselves. Instead of long, formal classes, offer hands-on workshops where your teams can try AI tools on their real daily tasks. Encourage everyone to share what worked and what didn’t, and identify “AI champions” who can help their teammates navigate challenges. When managers and leaders openly use AI themselves and discuss its benefits and limitations, it sets a powerful example that using AI is part of how work happens now.

Tracking results doesn’t require fancy analytics. A simple Google Sheet updated weekly can record how many AI requests each team member makes, estimated time saved on tasks, and changes in customer satisfaction. Measure a baseline for each goal before the pilot starts so that any change has something to be compared against. If the pilot isn’t meeting its goals after 30 days, pause and rethink before expanding.
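The weekly check against the pilot’s goals is simple arithmetic: the reduction is (baseline - current) / baseline, which in a sheet could be a formula like `=(B2-C2)/B2` with the baseline in column B and the latest weekly value in column C (a hypothetical layout). The Python sketch below performs the same calculation for several goals at once; the goal names and numbers are illustrative, not results from any real pilot.

```python
from dataclasses import dataclass


@dataclass
class Goal:
    name: str
    baseline: float          # value measured before the pilot, e.g. minutes per email reply
    target_reduction: float  # fractional target, e.g. 0.20 for a 20% cut


def percent_reduction(baseline: float, current: float) -> float:
    """Fractional reduction relative to the baseline (0.25 means 25% lower)."""
    if baseline <= 0:
        raise ValueError("baseline must be positive")
    return (baseline - current) / baseline


def review_pilot(goals: list[Goal], latest: dict[str, float]) -> dict[str, bool]:
    """For each goal, report whether the latest weekly value meets its target."""
    return {
        g.name: percent_reduction(g.baseline, latest[g.name]) >= g.target_reduction
        for g in goals
    }


if __name__ == "__main__":
    # Illustrative numbers only.
    goals = [
        Goal("email_reply_minutes", baseline=12.0, target_reduction=0.20),
        Goal("data_entry_hours_per_week", baseline=10.0, target_reduction=0.30),
    ]
    latest = {"email_reply_minutes": 9.0, "data_entry_hours_per_week": 8.0}
    results = review_pilot(goals, latest)
    for name, met in results.items():
        print(f"{name}: {'met' if met else 'not met'}")
    if not all(results.values()):
        print("Some goals missed: pause and rethink before expanding.")
```

With these illustrative numbers, email replies drop from 12 to 9 minutes (a 25% reduction, meeting the 20% target), while data entry falls from 10 to 8 hours (20%, short of the 30% target), so the sketch flags the pilot for a closer look before expanding.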

Keep in mind common pitfalls along the way. Don’t rush to automate complex workflows without testing, because early AI outputs can be inaccurate or biased. Don’t assume AI will replace deep expertise; it’s a tool to augment human judgment, not a substitute for it. And don’t overlook data privacy: sending customer information to third-party AI providers without proper data processing agreements in place can lead to compliance headaches.

Shopify’s success came from building trust with its legal team, investing in infrastructure that made AI accessible, and carefully measuring how AI use related to better work outcomes. Smaller companies might not build internal AI proxies or sophisticated dashboards, but they can still embrace the same spirit: enable access, encourage experimentation, and measure what matters.

By starting with a focused pilot, using affordable tools, setting simple but clear usage rules, training through hands-on practice, and watching the results carefully, your company can unlock AI’s potential without unnecessary risk or wasted effort.