Some content on this blog is developed with AI assistance tools like Claude.

In an age when technological advancement accelerates by the day, many of us find ourselves at a crossroads: How do we embrace innovation while staying true to our core values? How can we leverage new tools without losing sight of what makes our work, and our lives, meaningful? These questions have become particularly pressing as artificial intelligence transforms industries, reshapes professional landscapes, and challenges our understanding of creativity and productivity.

Drawing on insights from multiple disciplines, this guide offers a framework for navigating this complex terrain. Whether you’re a professional looking to stay relevant, a content creator seeking to stand out, or simply someone trying to make sense of rapid change, these principles can help you chart a course toward purposeful growth that balances technological adoption with human connection and impact.

Understanding Technology as an Enhancement Tool

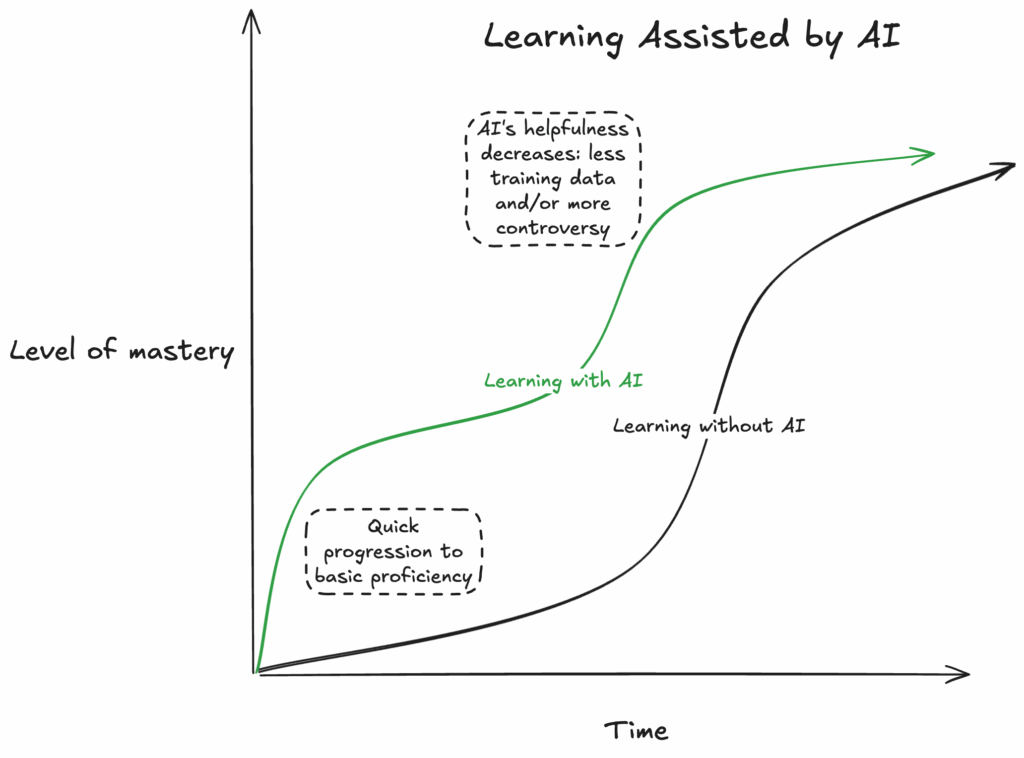

The narrative around technology—particularly AI—often centers on replacement and obsolescence. Headlines warn of jobs being automated away, creative work being devalued, and human skills becoming redundant. But this perspective misses a crucial insight: technology’s greatest value comes not from replacing human effort but from enhancing it.

From Replacement to Augmentation

Consider the case of Rust Communications, a media company that found success not by replacing journalists with AI but by using AI to enhance their work. By training their systems on historical archives, they gave reporters access to deeper context and institutional knowledge, allowing them to focus on what humans do best: asking insightful questions, building relationships with sources, and applying critical judgment to complex situations.

This illustrates a fundamental shift in thinking: rather than asking, “What can technology do instead of me?” the more productive question becomes, “How can technology help me do what I do, better?”

Practical Implementation

This mindset shift opens up possibilities across virtually any field:

- In creative work: AI tools can handle routine formatting tasks, suggest alternative approaches, or help identify blind spots in your thinking—freeing you to focus on the aspects that require human judgment and creative vision.

- In knowledge work: Automated systems can gather and organize information, track patterns in data, or draft preliminary analyses—allowing you to devote more time to synthesis, strategy, and communication.

- In service roles: Digital tools can manage scheduling, documentation, and routine follow-ups—creating more space for meaningful human interaction and personalized attention.

The key is to approach technology adoption strategically, identifying specific pain points or constraints in your work and targeting those areas for enhancement. This requires an honest assessment of where you currently spend time on low-value tasks that could be automated or augmented, and where your unique human capabilities add the most distinctive value.

Developing a Strategic Approach to Content and Information

As content proliferates and attention becomes increasingly scarce, thoughtful approaches to creating, organizing, and consuming information become critical professional and personal skills.

The Power of Diversification

Data from the publishing industry suggests a clear trend: organizations that diversify their content strategies across formats and channels tend to outperform those that remain narrowly focused. This doesn’t mean pursuing every platform or trend, but rather thoughtfully expanding your approach based on audience needs and behavior.

Consider developing a content ecosystem that might include:

- Long-form written content for depth and authority

- Audio formats like podcasts for accessibility and multitasking audiences

- Visual elements that explain complex concepts efficiently

- Interactive components that increase engagement and retention

The goal isn’t to create more content but to create more effective content by matching format to function and audience preference.

The Value of Structure and Categorization

As AI systems play an increasingly important role in content discovery and recommendation, structure becomes as important as substance. Content that is carefully categorized, clearly structured, and designed with specific audience segments in mind is more likely to be surfaced by search and recommendation algorithms.

Practical steps include:

- Developing consistent taxonomies for your content or knowledge base

- Creating clear information hierarchies that signal importance and relationships

- Tagging content with relevant metadata that helps systems understand context and relevance

- Structuring information to appeal to specific audience segments with definable characteristics

This approach benefits not only discovery but also your own content development process, as it forces clarity about purpose, audience, and context.
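To make the taxonomy and metadata steps above concrete, here is a minimal Python sketch. The two-level taxonomy, the field names (`topic`, `subtopic`, `audience`, `fmt`), the helper `by_audience`, and the sample items are all hypothetical; the point is only to show how a controlled vocabulary, a hierarchical path, and an audience tag can be enforced and queried together.

```python
from dataclasses import dataclass, field

# Hypothetical two-level taxonomy: top-level topic -> allowed subtopics.
TAXONOMY: dict[str, set[str]] = {
    "technology": {"ai-tools", "automation", "data"},
    "community": {"local-impact", "volunteering"},
}


@dataclass
class ContentItem:
    title: str
    topic: str
    subtopic: str
    audience: str  # hypothetical audience-segment label
    fmt: str       # e.g. "article", "podcast", "video"
    tags: set[str] = field(default_factory=set)  # free-form extra metadata

    def __post_init__(self) -> None:
        # Reject categories outside the taxonomy so labels stay consistent.
        allowed = TAXONOMY.get(self.topic)
        if allowed is None:
            raise ValueError(f"unknown topic: {self.topic!r}")
        if self.subtopic not in allowed:
            raise ValueError(f"{self.subtopic!r} is not a subtopic of {self.topic!r}")

    @property
    def path(self) -> str:
        # A hierarchical path signals where the item sits in the hierarchy.
        return f"{self.topic}/{self.subtopic}"


def by_audience(items: list[ContentItem], audience: str) -> list[ContentItem]:
    """Return the items aimed at one audience segment."""
    return [item for item in items if item.audience == audience]


# Usage with two made-up items.
library = [
    ContentItem("Using AI to draft research notes", "technology", "ai-tools",
                "professionals", "article", {"productivity"}),
    ContentItem("Organizing a neighborhood tool library", "community",
                "local-impact", "residents", "podcast"),
]
for item in by_audience(library, "professionals"):
    print(item.path, "|", item.title)
# prints: technology/ai-tools | Using AI to draft research notes
```

Validating categories when an item is created is one design choice among several: it trades some flexibility for consistency, which is the main reason to have a taxonomy in the first place.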

Cultivating Media Literacy

The flip side of strategic content creation is purposeful consumption. As information sources multiply and traditional gatekeepers lose influence, the ability to evaluate sources critically becomes an essential skill.

Developing robust media literacy involves:

- Diversifying news sources across political perspectives

- Understanding the business models that fund different information sources

- Recognizing common patterns of misinformation and distortion

- Supporting quality journalism through subscriptions and engagement

This isn’t merely about avoiding misinformation; it’s about developing a rich, nuanced understanding of complex issues by integrating multiple perspectives and evaluating claims against evidence.

Leveraging Data and Knowledge Assets

Every individual and organization possesses unique information assets—whether formal archives, institutional knowledge, or accumulated experience. In a knowledge economy, the ability to identify, organize, and leverage these assets becomes a significant competitive advantage.

Mining Archives for Value

Hidden within many organizations are treasure troves of historical data, past projects, customer interactions, and institutional knowledge. These archives often contain valuable insights that can inform current work, reveal patterns, and provide context that newer team members lack.

The process of activating these assets typically involves:

- Systematic assessment of what historical information exists

- Strategic digitization of the most valuable materials

- Thoughtful organization using consistent metadata and taxonomies

- Integration with current workflows through searchable databases or knowledge management systems

You can apply similar principles to personal knowledge management, creating systems that help you retain and leverage your accumulated learning and experience.

Building First-Party Data Systems

Organizations that collect, analyze, and apply proprietary data often gain an edge over those that rely primarily on third-party information. This “first-party data” (information gathered directly from your audience, customers, or operations) provides unique insights that can drive decisions, improve offerings, and create additional value through partnerships.

Effective first-party data strategies include:

- Identifying the most valuable data points for your specific context

- Creating ethical collection mechanisms that provide clear value exchanges

- Developing analysis capabilities that transform raw data into actionable insights

- Establishing governance frameworks that protect privacy while enabling innovation

Even individuals and small teams can benefit from systematic approaches to gathering feedback, tracking results, and analyzing patterns in their work and audience responses.

Applying Sophisticated Decision Models

As data accumulates and contexts become more complex, simple intuitive decision-making often proves inadequate. More sophisticated approaches—like stochastic models that account for uncertainty and variation—can significantly improve outcomes in situations ranging from financial planning to product development.

While the mathematical details of these models can be complex, the underlying principles are accessible:

- Embrace probability rather than certainty in your planning

- Consider multiple potential scenarios rather than single forecasts

- Account for both expected outcomes and variations

- Test decisions against potential extremes, not just average cases

These approaches help create more robust strategies that can weather unpredictable events and capture upside potential while mitigating downside risks.
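As a rough illustration of these principles, the following Python sketch runs a simple Monte Carlo simulation, one common kind of stochastic model, over a hypothetical product decision. The demand and cost distributions, the prices, the fixed costs, and the function names are invented for illustration, not drawn from any real data; the sketch shows how simulating many scenarios exposes the spread of outcomes, plausible extremes, and the probability of a loss, rather than a single forecast.

```python
import random
import statistics


def simulate_profit(rng: random.Random, price: float, fixed_cost: float) -> float:
    # Hypothetical model: demand is uncertain (normal, floored at zero),
    # and unit cost varies uniformly within a range.
    demand = max(0.0, rng.gauss(1_000, 300))
    unit_cost = rng.uniform(4.0, 7.0)
    return demand * (price - unit_cost) - fixed_cost


def summarize(price: float, fixed_cost: float,
              runs: int = 10_000, seed: int = 42) -> dict[str, float]:
    rng = random.Random(seed)  # fixed seed so results are reproducible
    outcomes = sorted(simulate_profit(rng, price, fixed_cost) for _ in range(runs))
    return {
        "mean": statistics.fmean(outcomes),    # expected outcome
        "stdev": statistics.stdev(outcomes),   # variation around it
        "p05": outcomes[int(0.05 * runs)],     # bad-but-plausible case
        "p95": outcomes[int(0.95 * runs)],     # good-but-plausible case
        "prob_loss": sum(o < 0 for o in outcomes) / runs,
    }


# Compare two hypothetical options on more than their averages.
for name, price, fixed in [("conservative", 9.0, 2_000), ("ambitious", 12.0, 5_000)]:
    s = summarize(price, fixed)
    print(f"{name:>12}: mean={s['mean']:,.0f} p05={s['p05']:,.0f} "
          f"p95={s['p95']:,.0f} P(loss)={s['prob_loss']:.1%}")
```

With these made-up numbers, both options should have roughly the same average profit (around 1,500), yet the ambitious option shows a wider spread and a noticeably higher chance of loss. A single-point forecast would hide exactly that difference.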

Aligning Work with Purpose and Community

Perhaps the most important theme emerging from diverse sources is the centrality of purpose and community connection to sustainable success and satisfaction. Technical skills and technological adoption matter, but their ultimate value depends on how they connect to human needs and values.

Balancing Innovation and Values

Organizations and individuals that successfully navigate technological change typically maintain a clear sense of core values and purpose while evolving their methods and tools. This doesn’t mean resisting change, but rather ensuring that technological adoption serves rather than subverts fundamental principles.

The process involves regular reflection on questions like:

- How does this new approach or tool align with our core values?

- Does this innovation strengthen or weaken our connections to the communities we serve?

- Are we adopting technology to enhance our core purpose or simply because it’s available?

- What guardrails need to be in place to ensure technology serves our values?

This reflective process helps prevent the common pattern where means (technology, metrics, processes) gradually displace ends (purpose, impact, values) as the focus of attention and decision-making.

Assessing Growth for Impact

A similar rebalancing applies to personal and professional development. The self-improvement industry often promotes growth for its own sake: more skills, more productivity, more achievement. But research on well-being suggests that lasting satisfaction tends to come from growth that connects to something beyond self-interest.

Consider periodically auditing your development activities with questions like:

- Is this growth path helping me contribute more effectively to others?

- Am I developing capabilities that address meaningful needs in my community or field?

- Does my definition of success include positive impact beyond personal achievement?

- Are my learning priorities aligned with the problems that most need solving?

This doesn’t mean abandoning personal ambition or achievement, but rather connecting it to broader purpose and impact.

Starting Local

While global problems often command the most attention, the most sustainable impact typically starts close to home. The philosopher Mòzǐ offered a simple test that applies at any scale: “Does it benefit people? Then do it. Does it not benefit people? Then stop.”

This local focus might involve:

- Supporting immediate family and community needs through childcare, elder support, or neighborhood organization

- Applying professional skills to local challenges through pro bono work or community involvement

- Building relationships that strengthen social fabric in your immediate environment

- Creating local systems that reduce dependency on distant supply chains

These local connections not only create immediate benefit but also build the relationships and resilience that sustain longer-term efforts and larger-scale impact.

Integrating Technology and Humanity: A Balanced Path Forward

The themes explored above converge on a central insight: the most successful approaches to contemporary challenges integrate technological capability with human purpose. Neither Luddite resistance nor uncritical techno-optimism serves us well; instead, we need thoughtful integration that leverages technology’s power while preserving human agency and values.

This balanced approach involves:

- Selective adoption of technologies that enhance your distinctive capabilities

- Strategic organization of content and knowledge to leverage both human and machine intelligence

- Purposeful collection and analysis of data that informs meaningful decisions

- Regular reflection on how technological tools align with core values and purpose

- Consistent connection of personal and professional growth to community benefit

No single formula applies to every situation, but these principles provide a framework for navigating the complex relationship between technological advancement and human flourishing.

The organizations and individuals who thrive in coming years will likely be those who master this integration—leveraging AI and other advanced technologies not as replacements for human judgment and creativity, but as amplifiers of human capacity to solve problems, create value, and build meaningful connections.

In a world increasingly shaped by algorithms and automation, the distinctive value of human judgment, creativity, and purpose only grows more essential. By approaching technological change with this balanced perspective, we can build futures that harness innovation’s power while remaining true to the values and connections that give our work—and our lives—meaning.

What steps will you take to implement this balanced approach in your own work and life?