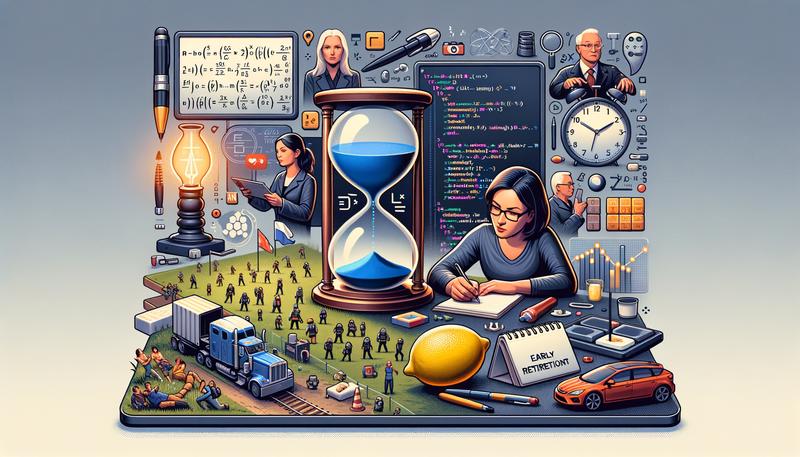

Hey there! This post shares an exciting mix of topics – from cracking the Markov equation and thoughts on early retirement, to coding handwriting in JavaScript and organizing epic games of Capture the Flag. You’ll also get insights on Google’s code review process, powerful lessons from a mentor, and even tips on keeping leftover food fresh. Don’t miss the deep dive into speculative decoding for AI enthusiasts!

- Markov unchained: Ashleigh Wilcox looks for integer solutions to the Markov equation

- The Ultimate Life Coach: Almost nineteen years into early retirement now, I’ve come to realize that the complete freedom of this lifestyle can be a double-edged sword. Yo

- 30

- It’s Hard for Me to Live with Me: A Memoir: #promo A powerful memoir from the University of Kentucky basketball legend, NBA veteran, and social media influencer about his recovery from addiction.

- What should a beginner developer learn if they were to start today?: Where should new developers start today? What should they learn to set themselves up for a successful career? Scott Hanselman recommends they become T-shaped developers.

- Coding my Handwriting: Coding my handwriting in JavaScript – how I did it and what I’m doing with it.

- I hosted a spectacular 20-acre game of Capture the Flag [#61]: Many players said afterward: “This was the best game of Capture the Flag, like… EVER.”

- The Digital Antiquarian

- The Lunacy of Artemis

- How Google does code review: Google has two internal code review tools: Critique, which is used by the majority of engineers, and Gerrit, which is open-sourced and continues to be used by public-facing projects. Here’s how they work.

- Thinking out loud about 2nd-gen Email

- A Hitchhiker’s Guide to Speculative Decoding: Speculative decoding is an optimization technique for inference that makes educated guesses about future tokens while generating the current token, all within a single forward pass. It incorporates a verification mechanism to ensure the correctness of these speculated tokens, thereby guaranteeing that the overall output of speculative decoding is identical to that of vanilla decoding. Optimizing the cost of inference of large language models (LLMs) is arguably one of the most critical factors in reducing the cost of generative AI and increasing its adoption. Towards this goal, various inference optimization techniques are available, including custom kernels, dynamic batching of input requests, and quantization of large models.

- 5 Powerful Lessons From My Opinionated Mentor: Lessons on promotion, relationship-building, and goal-setting

- How to store half a lemon – and 17 other ways to keep leftover food fresh: What should you do with opened tins, cut avocados and egg yolks that weren’t required in your recipe? Chefs and food experts tell all

- The 15 Very Best Bedside Lights: The best bedside light is the Hay Matin lamp, which has a light-filtering pleated cotton shade and three brightness levels.
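
For readers curious about the speculative decoding entry above, here is a minimal sketch of the draft-then-verify idea under greedy decoding. Everything in it is an assumption made for illustration: `target_next` and `draft_next` are hypothetical toy stand-ins for a large model and a cheap draft model, and the verification loop calls the target once per position, whereas a real system would score all drafted positions in a single batched forward pass. It is not the linked article's implementation.

```python
from typing import Callable, List

# A "model" here is just a function mapping a token sequence to the next token.
Model = Callable[[List[int]], int]


def target_next(tokens: List[int]) -> int:
    """Hypothetical stand-in for the large, expensive model (greedy choice)."""
    return (tokens[-1] * 3 + len(tokens)) % 7


def draft_next(tokens: List[int]) -> int:
    """Hypothetical cheap draft model: agrees with the target most of the time."""
    guess = target_next(tokens)
    return guess if len(tokens) % 4 else (guess + 1) % 7


def vanilla_decode(model: Model, prompt: List[int], n_new: int) -> List[int]:
    """Plain greedy decoding: one model call per generated token."""
    tokens = list(prompt)
    for _ in range(n_new):
        tokens.append(model(tokens))
    return tokens


def speculative_decode(
    target: Model, draft: Model, prompt: List[int], n_new: int, k: int = 3
) -> List[int]:
    """Greedy speculative decoding: draft k tokens, keep the verified prefix."""
    tokens = list(prompt)
    goal = len(prompt) + n_new
    while len(tokens) < goal:
        # 1. The draft model guesses k future tokens.
        proposal: List[int] = []
        for _ in range(k):
            proposal.append(draft(tokens + proposal))

        # 2. The target checks each guess in order. Every token we keep is the
        #    target's own choice, so the output matches vanilla decoding.
        accepted: List[int] = []
        for i in range(k + 1):
            expected = target(tokens + accepted)
            accepted.append(expected)
            # Stop at the first wrong guess, or after the bonus token that
            # follows k correct guesses.
            if i == k or proposal[i] != expected:
                break
        tokens.extend(accepted)
    return tokens[:goal]


if __name__ == "__main__":
    prompt = [1, 2]
    fast = speculative_decode(target_next, draft_next, prompt, n_new=12)
    slow = vanilla_decode(target_next, prompt, n_new=12)
    print(fast == slow)
```

The speedup in practice comes from the fact that each verification round can accept several tokens at once when the draft guesses well, while a wrong guess costs nothing in correctness because the target's own token replaces it.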